HeatWave User Guide

Abstract

This document describes how to use HeatWave. It covers how to load data, run queries, optimize analytics workloads,

and use HeatWave machine learning capabilities.

For information about creating and managing a HeatWave Cluster on Oracle Cloud Infrastructure (OCI), see

HeatWave on OCI Service Guide.

For information about creating and managing a HeatWave Cluster on Amazon Web Services (AWS), see HeatWave

on AWS Service Guide.

For information about creating and managing a HeatWave Cluster on Oracle Database Service for Azure (ODSA),

see HeatWave for Azure Service Guide.

For MySQL Server documentation, refer to the MySQL Reference Manual.

For information about the latest HeatWave features and updates, refer to the HeatWave Release Notes.

For legal information, see the Legal Notices.

For help with using MySQL, please visit the MySQL Forums, where you can discuss your issues with other MySQL

users.

Document generated on: 2024-09-02 (revision: 79499)

Table of Contents

Preface and Legal Notices ................................................................................................................. ix

1 Overview ......................................................................................................................................... 1

1.1 HeatWave Architectural Features .......................................................................................... 1

1.2 HeatWave MySQL ................................................................................................................ 3

1.3 HeatWave AutoML ............................................................................................................... 3

1.4 HeatWave GenAI ................................................................................................................. 4

1.5 HeatWave Lakehouse ........................................................................................................... 4

1.6 HeatWave Autopilot .............................................................................................................. 4

1.7 MySQL Functionality for HeatWave ....................................................................................... 7

2 HeatWave MySQL .......................................................................................................................... 9

2.1 Before You Begin ............................................................................................................... 11

2.2 Loading Data to HeatWave MySQL ..................................................................................... 11

2.2.1 Prerequisites ............................................................................................................ 12

2.2.2 Loading Data Manually ............................................................................................ 13

2.2.3 Loading Data Using Auto Parallel Load ..................................................................... 15

2.2.4 Monitoring Load Progress ........................................................................................ 24

2.2.5 Checking Load Status .............................................................................................. 24

2.2.6 Data Compression ................................................................................................... 25

2.2.7 Change Propagation ................................................................................................ 25

2.2.8 Reload Tables ......................................................................................................... 26

2.3 Running Queries ................................................................................................................ 27

2.3.1 Query Prerequisites ................................................................................................. 27

2.3.2 Running Queries ...................................................................................................... 28

2.3.3 Auto Scheduling ...................................................................................................... 29

2.3.4 Auto Query Plan Improvement ................................................................................. 30

2.3.5 Dynamic Query Offload ............................................................................................ 30

2.3.6 Debugging Queries .................................................................................................. 30

2.3.7 Query Runtimes and Estimates ................................................................................ 32

2.3.8 CREATE TABLE ... SELECT Statements .................................................................. 33

2.3.9 INSERT ... SELECT Statements ............................................................................... 33

2.3.10 Using Views ........................................................................................................... 33

2.4 Modifying Tables ................................................................................................................ 34

2.5 Unloading Data from HeatWave MySQL .............................................................................. 35

2.5.1 Unloading Tables ..................................................................................................... 35

2.5.2 Unloading Partitions ................................................................................................. 36

2.5.3 Unloading Data Using Auto Unload .......................................................................... 36

2.5.4 Unload All Tables .................................................................................................... 40

2.6 Table Load and Query Example .......................................................................................... 41

2.7 Workload Optimization for OLAP ......................................................................................... 43

2.7.1 Encoding String Columns ......................................................................................... 43

2.7.2 Defining Data Placement Keys ................................................................................. 45

2.7.3 HeatWave Autopilot Advisor Syntax .......................................................................... 46

2.7.4 Auto Encoding ......................................................................................................... 48

2.7.5 Auto Data Placement ............................................................................................... 51

2.7.6 Auto Query Time Estimation ..................................................................................... 55

2.7.7 Unload Advisor ........................................................................................................ 58

2.7.8 Advisor Command-line Help ..................................................................................... 58

2.7.9 Autopilot Report Table ............................................................................................. 59

2.7.10 Advisor Report Table ............................................................................................. 60

2.8 Workload Optimization for OLTP ......................................................................................... 61

2.8.1 Autopilot Indexing .................................................................................................... 61

iii

HeatWave User Guide

2.9 Best Practices .................................................................................................................... 64

2.9.1 Preparing Data ........................................................................................................ 64

2.9.2 Provisioning ............................................................................................................. 65

2.9.3 Importing Data into the MySQL DB System ............................................................... 66

2.9.4 Inbound Replication ................................................................................................. 66

2.9.5 Loading Data ........................................................................................................... 66

2.9.6 Auto Encoding and Auto Data Placement ................................................................. 68

2.9.7 Running Queries ...................................................................................................... 68

2.9.8 Monitoring ................................................................................................................ 73

2.9.9 Reloading Data ........................................................................................................ 73

2.10 Supported Data Types ...................................................................................................... 74

2.11 Supported SQL Modes ..................................................................................................... 75

2.12 Supported Functions and Operators .................................................................................. 75

2.12.1 Aggregate Functions .............................................................................................. 75

2.12.2 Arithmetic Operators .............................................................................................. 78

2.12.3 Cast Functions and Operators ................................................................................ 79

2.12.4 Comparison Functions and Operators ..................................................................... 79

2.12.5 Control Flow Functions and Operators .................................................................... 80

2.12.6 Data Masking and De-Identification Functions ......................................................... 80

2.12.7 Encryption and Compression Functions ................................................................... 81

2.12.8 JSON Functions ..................................................................................................... 81

2.12.9 Logical Operators ................................................................................................... 82

2.12.10 Mathematical Functions ........................................................................................ 83

2.12.11 String Functions and Operators ............................................................................ 84

2.12.12 Temporal Functions .............................................................................................. 86

2.12.13 Window Functions ................................................................................................ 89

2.13 SELECT Statement ........................................................................................................... 89

2.14 String Column Encoding Reference ................................................................................... 92

2.14.1 Variable-length Encoding ........................................................................................ 92

2.14.2 Dictionary Encoding ............................................................................................... 93

2.14.3 Column Limits ........................................................................................................ 94

2.15 Troubleshooting ................................................................................................................ 94

2.16 Metadata Queries ............................................................................................................. 97

2.16.1 Secondary Engine Definitions ................................................................................. 97

2.16.2 Excluded Columns ................................................................................................. 98

2.16.3 String Column Encoding ......................................................................................... 98

2.16.4 Data Placement ..................................................................................................... 99

2.17 Bulk Ingest Data to MySQL Server .................................................................................. 100

2.18 HeatWave MySQL Limitations ......................................................................................... 102

2.18.1 Change Propagation Limitations ............................................................................ 102

2.18.2 Data Type Limitations ........................................................................................... 102

2.18.3 Functions and Operator Limitations ....................................................................... 103

2.18.4 Index Hint and Optimizer Hint Limitations .............................................................. 105

2.18.5 Join Limitations .................................................................................................... 105

2.18.6 Partition Selection Limitations ............................................................................... 106

2.18.7 Variable Limitations .............................................................................................. 106

2.18.8 Bulk Ingest Data to MySQL Server Limitations ....................................................... 107

2.18.9 Other Limitations .................................................................................................. 108

3 HeatWave AutoML ...................................................................................................................... 111

3.1 HeatWave AutoML Features ............................................................................................. 112

3.1.1 HeatWave AutoML Supervised Learning ................................................................. 112

3.1.2 HeatWave AutoML Ease of Use ............................................................................. 112

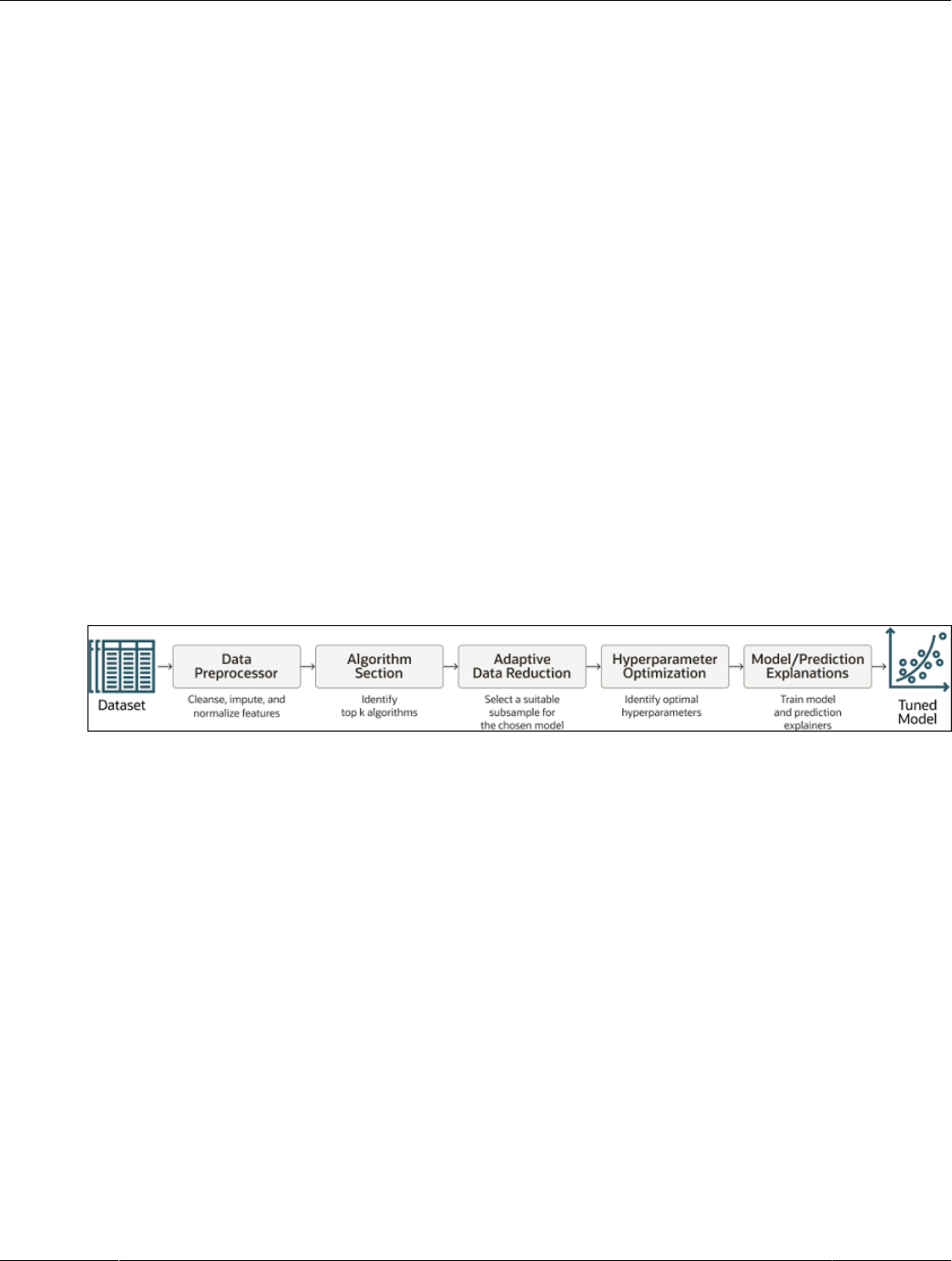

3.1.3 HeatWave AutoML Workflow .................................................................................. 113

3.1.4 Oracle AutoML ....................................................................................................... 114

iv

HeatWave User Guide

3.2 Before You Begin ............................................................................................................. 114

3.3 Getting Started ................................................................................................................. 115

3.4 Preparing Data ................................................................................................................. 115

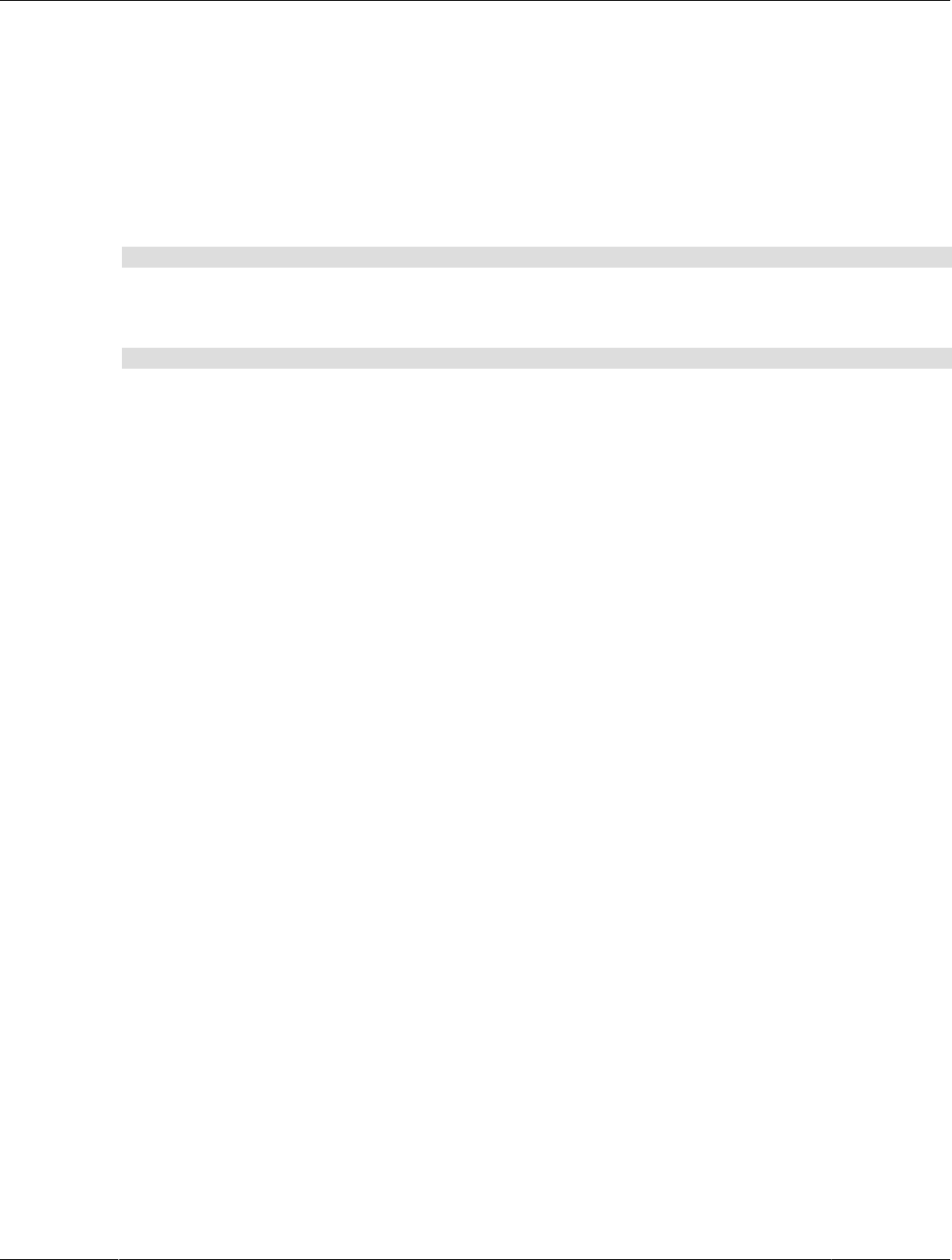

3.4.1 Labeled Data ......................................................................................................... 115

3.4.2 Unlabeled Data ...................................................................................................... 116

3.4.3 General Data Requirements ................................................................................... 117

3.4.4 Example Data ........................................................................................................ 117

3.4.5 Example Text Data ................................................................................................ 119

3.5 Training a Model .............................................................................................................. 119

3.5.1 Advanced ML_TRAIN Options ................................................................................ 120

3.6 Training Explainers ........................................................................................................... 121

3.7 Predictions ....................................................................................................................... 123

3.7.1 Row Predictions ..................................................................................................... 123

3.7.2 Table Predictions ................................................................................................... 124

3.8 Explanations ..................................................................................................................... 124

3.8.1 Row Explanations .................................................................................................. 125

3.8.2 Table Explanations ................................................................................................. 126

3.9 Forecasting ....................................................................................................................... 127

3.9.1 Training a Forecasting Model ................................................................................. 127

3.9.2 Using a Forecasting Model ..................................................................................... 128

3.10 Anomaly Detection .......................................................................................................... 130

3.10.1 Training an Anomaly Detection Model ................................................................... 131

3.10.2 Using an Anomaly Detection Model ...................................................................... 132

3.11 Recommendations .......................................................................................................... 134

3.11.1 Training a Recommendation Model ....................................................................... 135

3.11.2 Using a Recommendation Model .......................................................................... 137

3.12 HeatWave AutoML and Lakehouse .................................................................................. 148

3.13 Managing Models ............................................................................................................ 151

3.13.1 The Model Catalog ............................................................................................... 151

3.13.2 ONNX Model Import ............................................................................................. 156

3.13.3 Loading Models .................................................................................................... 162

3.13.4 Unloading Models ................................................................................................ 163

3.13.5 Viewing Models .................................................................................................... 163

3.13.6 Scoring Models .................................................................................................... 164

3.13.7 Model Explanations .............................................................................................. 165

3.13.8 Model Handles ..................................................................................................... 165

3.13.9 Deleting Models ................................................................................................... 166

3.13.10 Sharing Models .................................................................................................. 166

3.14 Progress tracking ............................................................................................................ 167

3.15 HeatWave AutoML Routines ............................................................................................ 172

3.15.1 ML_TRAIN ........................................................................................................... 172

3.15.2 ML_EXPLAIN ....................................................................................................... 178

3.15.3 ML_MODEL_IMPORT .......................................................................................... 180

3.15.4 ML_PREDICT_ROW ............................................................................................ 181

3.15.5 ML_PREDICT_TABLE .......................................................................................... 185

3.15.6 ML_EXPLAIN_ROW ............................................................................................. 189

3.15.7 ML_EXPLAIN_TABLE .......................................................................................... 191

3.15.8 ML_SCORE ......................................................................................................... 193

3.15.9 ML_MODEL_LOAD .............................................................................................. 195

3.15.10 ML_MODEL_UNLOAD ........................................................................................ 196

3.15.11 Model Types ...................................................................................................... 196

3.15.12 Optimization and Scoring Metrics ........................................................................ 198

3.16 Supported Data Types .................................................................................................... 202

3.17 HeatWave AutoML Error Messages ................................................................................. 203

v

HeatWave User Guide

3.18 HeatWave AutoML Limitations ......................................................................................... 225

4 HeatWave GenAI ........................................................................................................................ 227

4.1 Overview .......................................................................................................................... 227

4.2 Getting Started with HeatWave GenAI ............................................................................... 228

4.2.1 Requirements ........................................................................................................ 228

4.2.2 Quickstart: Setting Up a Help Chat ......................................................................... 229

4.3 Generating Text-Based Content ........................................................................................ 232

4.3.1 Generating New Content ........................................................................................ 232

4.3.2 Summarizing Content ............................................................................................. 233

4.4 Performing a Vector Search .............................................................................................. 234

4.4.1 HeatWave Vector Store Overview ........................................................................... 234

4.4.2 Setting Up a Vector Store ...................................................................................... 234

4.4.3 Updating the Vector Store ...................................................................................... 237

4.4.4 Running Retrieval-Augmented Generation ............................................................... 238

4.5 Running HeatWave Chat ................................................................................................... 241

4.5.1 Running HeatWave GenAI Chat ............................................................................. 241

4.5.2 Viewing Chat Session Details ................................................................................. 242

4.6 HeatWave GenAI Routines ............................................................................................... 243

4.6.1 ML_GENERATE .................................................................................................... 244

4.6.2 VECTOR_STORE_LOAD ....................................................................................... 246

4.6.3 ML_RAG ................................................................................................................ 247

4.6.4 HEATWAVE_CHAT ................................................................................................ 249

4.7 Troubleshooting Issues and Errors .................................................................................... 251

5 HeatWave Lakehouse .................................................................................................................. 255

5.1 Overview .......................................................................................................................... 255

5.1.1 External Tables ...................................................................................................... 256

5.1.2 Lakehouse Engine ................................................................................................. 256

5.1.3 Data Storage ......................................................................................................... 256

5.2 Loading Structured Data to HeatWave Lakehouse .............................................................. 256

5.2.1 Prerequisites .......................................................................................................... 256

5.2.2 Lakehouse External Table Syntax ........................................................................... 257

5.2.3 Loading Data Manually ........................................................................................... 262

5.2.4 Loading Data Using Auto Parallel Load ................................................................... 263

5.2.5 How to Load Data from External Storage Using Auto Parallel Load ........................... 266

5.2.6 Lakehouse Incremental Load .................................................................................. 271

5.3 Loading Unstructured Data to HeatWave Lakehouse .......................................................... 272

5.4 Access Object Storage ..................................................................................................... 272

5.4.1 Pre-Authenticated Requests ................................................................................... 272

5.4.2 Resource Principals ............................................................................................... 273

5.5 External Table Recovery ................................................................................................... 274

5.6 Data Types ....................................................................................................................... 274

5.6.1 Parquet Data Type Conversions ............................................................................. 274

5.7 HeatWave Lakehouse Limitations ...................................................................................... 277

5.7.1 Lakehouse Limitations for all File Formats ............................................................... 277

5.7.2 Lakehouse Limitations for the Avro Format Files ...................................................... 280

5.7.3 Lakehouse Limitations for the CSV File Format ....................................................... 281

5.7.4 Lakehouse Limitations for the JSON File Format ..................................................... 281

5.7.5 Lakehouse Limitations for the Parquet File Format .................................................. 281

5.8 HeatWave Lakehouse Error Messages .............................................................................. 281

6 System and Status Variables ....................................................................................................... 287

6.1 System Variables .............................................................................................................. 287

6.2 Status Variables ............................................................................................................... 291

7 HeatWave Performance and Monitoring ........................................................................................ 295

7.1 HeatWave MySQL Monitoring ........................................................................................... 295

vi

HeatWave User Guide

7.1.1 HeatWave Node Status Monitoring ......................................................................... 295

7.1.2 HeatWave Memory Usage Monitoring ..................................................................... 296

7.1.3 Data Load Progress and Status Monitoring ............................................................. 296

7.1.4 Change Propagation Monitoring .............................................................................. 297

7.1.5 Query Execution Monitoring .................................................................................... 298

7.1.6 Query History and Statistics Monitoring ................................................................... 299

7.1.7 Scanned Data Monitoring ....................................................................................... 300

7.2 HeatWave AutoML Monitoring ........................................................................................... 301

7.3 HeatWave Performance Schema Tables ............................................................................ 302

7.3.1 The rpd_column_id Table ....................................................................................... 302

7.3.2 The rpd_columns Table .......................................................................................... 303

7.3.3 The rpd_exec_stats Table ...................................................................................... 303

7.3.4 The rpd_nodes Table ............................................................................................. 304

7.3.5 The rpd_preload_stats Table .................................................................................. 305

7.3.6 The rpd_query_stats Table ..................................................................................... 306

7.3.7 The rpd_table_id Table .......................................................................................... 307

7.3.8 The rpd_tables Table ............................................................................................. 307

8 HeatWave Quickstarts ................................................................................................................. 311

8.1 HeatWave Quickstart Prerequisites .................................................................................... 311

8.2 tpch Analytics Quickstart ................................................................................................... 311

8.3 AirportDB Analytics Quickstart ........................................................................................... 320

8.4 Iris Data Set Machine Learning Quickstart ......................................................................... 324

vii

viii

Preface and Legal Notices

This is the user manual for HeatWave.

Legal Notices

Copyright © 1997, 2024, Oracle and/or its affiliates.

License Restrictions

This software and related documentation are provided under a license agreement containing restrictions

on use and disclosure and are protected by intellectual property laws. Except as expressly permitted

in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast,

modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any

means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for

interoperability, is prohibited.

Warranty Disclaimer

The information contained herein is subject to change without notice and is not warranted to be error-free.

If you find any errors, please report them to us in writing.

Restricted Rights Notice

If this is software, software documentation, data (as defined in the Federal Acquisition Regulation), or

related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S.

Government, then the following notice is applicable:

U.S. GOVERNMENT END USERS: Oracle programs (including any operating system, integrated

software, any programs embedded, installed, or activated on delivered hardware, and modifications

of such programs) and Oracle computer documentation or other Oracle data delivered to or accessed

by U.S. Government end users are "commercial computer software," "commercial computer software

documentation," or "limited rights data" pursuant to the applicable Federal Acquisition Regulation and

agency-specific supplemental regulations. As such, the use, reproduction, duplication, release, display,

disclosure, modification, preparation of derivative works, and/or adaptation of i) Oracle programs (including

any operating system, integrated software, any programs embedded, installed, or activated on delivered

hardware, and modifications of such programs), ii) Oracle computer documentation and/or iii) other Oracle

data, is subject to the rights and limitations specified in the license contained in the applicable contract.

The terms governing the U.S. Government's use of Oracle cloud services are defined by the applicable

contract for such services. No other rights are granted to the U.S. Government.

Hazardous Applications Notice

This software or hardware is developed for general use in a variety of information management

applications. It is not developed or intended for use in any inherently dangerous applications, including

applications that may create a risk of personal injury. If you use this software or hardware in dangerous

applications, then you shall be responsible to take all appropriate fail-safe, backup, redundancy, and other

measures to ensure its safe use. Oracle Corporation and its affiliates disclaim any liability for any damages

caused by use of this software or hardware in dangerous applications.

Trademark Notice

Oracle, Java, MySQL, and NetSuite are registered trademarks of Oracle and/or its affiliates. Other names

may be trademarks of their respective owners.

ix

Documentation Accessibility

Intel and Intel Inside are trademarks or registered trademarks of Intel Corporation. All SPARC trademarks

are used under license and are trademarks or registered trademarks of SPARC International, Inc. AMD,

Epyc, and the AMD logo are trademarks or registered trademarks of Advanced Micro Devices. UNIX is a

registered trademark of The Open Group.

Third-Party Content, Products, and Services Disclaimer

This software or hardware and documentation may provide access to or information about content,

products, and services from third parties. Oracle Corporation and its affiliates are not responsible for and

expressly disclaim all warranties of any kind with respect to third-party content, products, and services

unless otherwise set forth in an applicable agreement between you and Oracle. Oracle Corporation and its

affiliates will not be responsible for any loss, costs, or damages incurred due to your access to or use of

third-party content, products, or services, except as set forth in an applicable agreement between you and

Oracle.

Use of This Documentation

This documentation is NOT distributed under a GPL license. Use of this documentation is subject to the

following terms:

You may create a printed copy of this documentation solely for your own personal use. Conversion to other

formats is allowed as long as the actual content is not altered or edited in any way. You shall not publish

or distribute this documentation in any form or on any media, except if you distribute the documentation in

a manner similar to how Oracle disseminates it (that is, electronically for download on a Web site with the

software) or on a CD-ROM or similar medium, provided however that the documentation is disseminated

together with the software on the same medium. Any other use, such as any dissemination of printed

copies or use of this documentation, in whole or in part, in another publication, requires the prior written

consent from an authorized representative of Oracle. Oracle and/or its affiliates reserve any and all rights

to this documentation not expressly granted above.

Documentation Accessibility

For information about Oracle's commitment to accessibility, visit the Oracle Accessibility Program website

at

http://www.oracle.com/pls/topic/lookup?ctx=acc&id=docacc.

Access to Oracle Support for Accessibility

Oracle customers that have purchased support have access to electronic support through My Oracle

Support. For information, visit

http://www.oracle.com/pls/topic/lookup?ctx=acc&id=info or visit http://www.oracle.com/pls/topic/

lookup?ctx=acc&id=trs if you are hearing impaired.

x

Chapter 1 Overview

Table of Contents

1.1 HeatWave Architectural Features .................................................................................................. 1

1.2 HeatWave MySQL ........................................................................................................................ 3

1.3 HeatWave AutoML ....................................................................................................................... 3

1.4 HeatWave GenAI ......................................................................................................................... 4

1.5 HeatWave Lakehouse ................................................................................................................... 4

1.6 HeatWave Autopilot ...................................................................................................................... 4

1.7 MySQL Functionality for HeatWave ............................................................................................... 7

HeatWave is a massively parallel, high performance, in-memory query accelerator that accelerates MySQL

performance by orders of magnitude for analytics workloads, mixed workloads, and machine learning.

HeatWave can be accessed through Oracle Cloud Infrastructure (OCI), Amazon Web Services (AWS), and

Oracle Database Service for Azure (ODSA).

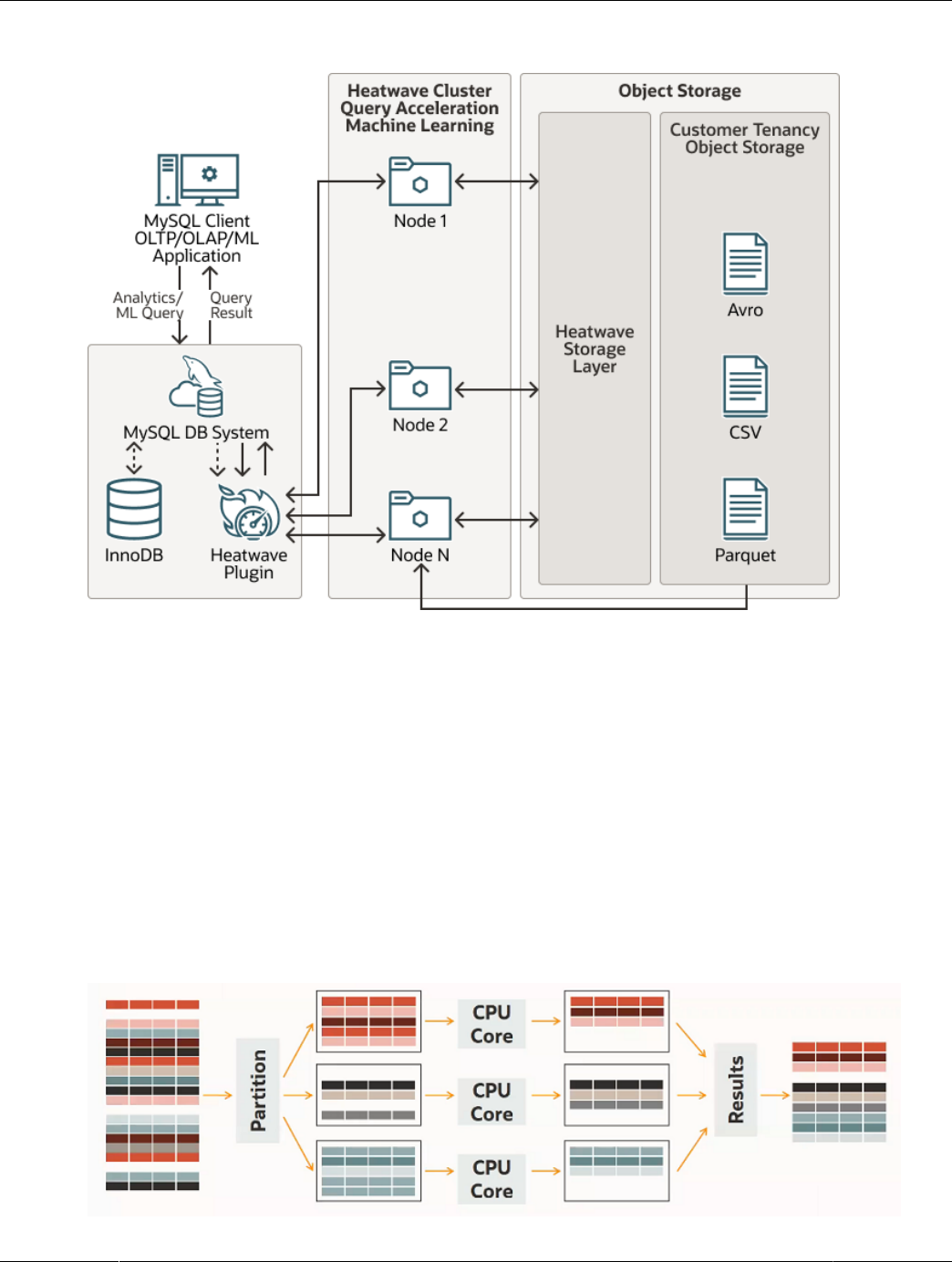

HeatWave consists of a MySQL DB System and HeatWave nodes. Analytics queries that meet certain

prerequisites are automatically offloaded from the MySQL DB System to the HeatWave Cluster for

accelerated processing. With a HeatWave Cluster, you can run online transaction processing (OLTP),

online analytical processing (OLAP), and mixed workloads from the same MySQL database without

requiring extract, transfer, and load (ETL), and without modifying your applications. For more information

about the analytical capabilities of HeatWave, see Chapter 2, HeatWave MySQL.

The MySQL DB System includes a HeatWave plugin that is responsible for cluster management, query

scheduling, and returning query results to the MySQL DB System. The HeatWave nodes store data in

memory and process analytics and machine learning queries. Each HeatWave node hosts an instance of

the HeatWave query processing engine (RAPID).

Enabling a HeatWave Cluster also provides access to HeatWave AutoML, which is a fully managed,

highly scalable, cost-efficient, machine learning solution for data stored in MySQL. HeatWave AutoML

provides a simple SQL interface for training and using predictive machine learning models, which can be

used by novice and experienced ML practitioners alike. Machine learning expertise, specialized tools, and

algorithms are not required. With HeatWave AutoML, you can train a model with a single call to an SQL

routine. Similarly, you can generate predictions with a single CALL or SELECT statement which can be

easily integrated with your applications.

1.1 HeatWave Architectural Features

The HeatWave architecture supports OLTP, OLAP and machine learning.

1

In-Memory Hybrid-Columnar Format

Figure 1.1 HeatWave Architecture

In-Memory Hybrid-Columnar Format

HeatWave stores data in main memory in a hybrid columnar format. The HeatWave hybrid approach

achieves the benefits of columnar format for query processing, while avoiding the materialization and

update costs associated with pure columnar format. Hybrid columnar format enables the use of efficient

query processing algorithms designed to operate on fixed-width data, and permits vectorized query

processing.

Massively Parallel Architecture

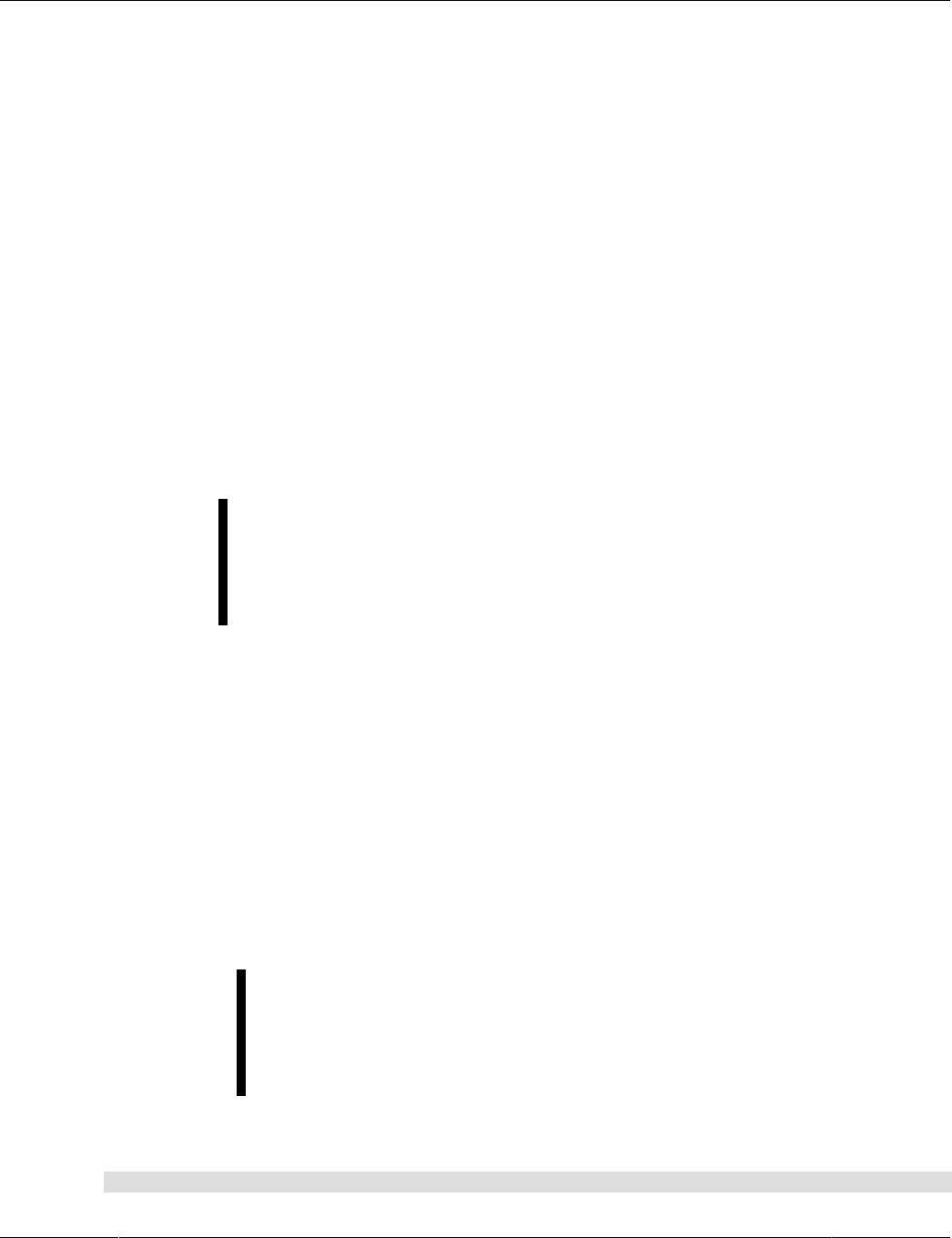

The HeatWave massively parallel architecture uses internode and intranode partitioning of data. Each

node within a HeatWave Cluster, and each CPU core within a node, processes the partitioned data in

parallel. HeatWave is capable of scaling to thousands of cores. This massively parallel architecture,

combined with high-fanout, workload-aware partitioning, accelerates query processing.

Figure 1.2 HeatWave Massively Parallel Architecture

2

Push-Based Vectorized Query Processing

Push-Based Vectorized Query Processing

HeatWave processes queries by pushing vector blocks (slices of columnar data) through the query

execution plan from one operator to another. A push-based execution model avoids deep call stacks and

saves valuable resources compared to tuple-based processing models.

Scale-Out Data Management

When analytics data is loaded into HeatWave, the HeatWave Storage Layer automatically persists the

data for pause and resume of the HeatWave Cluster and for fast recovery in case of a HeatWave node or

cluster failure. Data is automatically restored by the HeatWave Storage Layer when the HeatWave Cluster

resumes after a pause or recovers a failed node or cluster. This automated, self-managing storage layer

scales to the size required for your HeatWave Cluster and operates independently in the background.

HeatWave on OCI persists the data to OCI Object Storage. HeatWave on AWS persists the data to AWS

S3.

Native MySQL Integration

Native integration with MySQL provides a single data management platform for OLTP, OLAP, mixed

workloads, and machine learning. HeatWave is designed as a pluggable MySQL storage engine, which

enables management of both the MySQL and HeatWave using the same interfaces.

Changes to analytics data on the MySQL DB System are automatically propagated to HeatWave nodes

in real time, which means that queries always have access to the latest data. Change propagation is

performed automatically by a light-weight algorithm.

Users and applications interact with HeatWave through the MySQL DB System using standard tools and

standard-based ODBC/JDBC connectors. HeatWave supports the same ANSI SQL standard and ACID

properties as MySQL and the most commonly used data types. This support enables existing applications

to use HeatWave without modification, allowing for quick and easy integration.

1.2 HeatWave MySQL

Analytics and machine learning queries are issued from a MySQL client or application that interacts with

the HeatWave Cluster by connecting to the MySQL DB System. Results are returned to the MySQL DB

System and to the MySQL client or application that issued the query.

The number of HeatWave nodes required depends on data size and the amount of compression that is

achieved when loading data into the HeatWave Cluster. A HeatWave Cluster in Oracle Cloud Infrastructure

(OCI) or Oracle Database Service for Azure (ODSA) supports up to 64 nodes. On Amazon Web Services

(AWS), a HeatWave Cluster supports up to 128 nodes.

On Oracle Cloud Infrastructure (OCI), data that is loaded into HeatWave is automatically persisted to OCI

Object Storage, which allows data to be reloaded quickly when the HeatWave Cluster resumes after a

pause or when the HeatWave Cluster recovers from a cluster or node failure.

HeatWave network traffic is fully encrypted.

1.3 HeatWave AutoML

With HeatWave AutoML, data and models never leave HeatWave, saving you time and effort while

keeping your data and models secure. HeatWave AutoML is optimized for HeatWave shapes and scaling,

and all HeatWave AutoML processing is performed on the HeatWave Cluster. HeatWave distributes

ML computation among HeatWave nodes, to take advantage of the scalability and massively parallel

3

HeatWave GenAI

processing capabilities of HeatWave. For more information about the machine learning capabilities of

HeatWave, see Chapter 3, HeatWave AutoML.

1.4 HeatWave GenAI

The HeatWave GenAI feature of HeatWave lets you communicate with unstructured data in HeatWave

using natural language queries. It uses large language models (LLMs) to enable natural language

communication and provides an inbuilt vector store that you can use to store enterprise-specific proprietary

content to perform vector searches. HeatWave GenAI also includes HeatWave Chat which is a chatbot

that extends the generative AI and vector search functionalities to let you ask multiple follow-up questions

about a topic in a single session. HeatWave Chat can even draw its knowledge from documents ingested

by the vector store.

See: Chapter 4, HeatWave GenAI.

1.5 HeatWave Lakehouse

The Lakehouse feature of HeatWave enables query processing on data resident in Object Storage. The

source data is read from Object Storage, transformed to the memory optimized HeatWave format, stored in

the HeatWave persistence storage layer in Object Storage, and then loaded to HeatWave cluster memory.

• Provides in-memory query processing on data resident in Object Storage.

• Data is not loaded into the MySQL InnoDB storage layer.

• Supports structured and semi-structured relational data in the following file formats:

• Avro.

• CSV.

• JSON.

As of MySQL 8.4.0, Lakehouse supports Newline Delimited JSON.

• Parquet.

• With this feature, users can now analyse data in both InnoDB and an object store using familiar SQL

syntax in the same query.

See: Chapter 5, HeatWave Lakehouse. To use Lakehouse with HeatWave AutoML, see: Section 3.12,

“HeatWave AutoML and Lakehouse”.

1.6 HeatWave Autopilot

HeatWave Autopilot automates many of the most important and often challenging aspects of achieving

exceptional query performance at scale, including cluster provisioning, loading data, query processing, and

failure handling. It uses advanced techniques to sample data, collect statistics on data and queries, and

build machine learning models to model memory usage, network load, and execution time. The machine

learning models are used by HeatWave Autopilot to execute its core capabilities. HeatWave Autopilot

makes the HeatWave query optimizer increasingly intelligent as more queries are executed, resulting in

continually improving system performance.

System Setup

• Auto Provisioning

4

Data Load

Estimates the number of HeatWave nodes required by sampling the data, which means that manual

cluster size estimations are not necessary.

• For HeatWave on OCI, see Generating a Node Count Estimate in the HeatWave on OCI Service

Guide.

• For HeatWave on AWS, see Estimating Cluster Size with HeatWave Autopilot in the HeatWave on

AWS Service Guide.

• For HeatWave for Azure, see Provisioning HeatWave Nodes in the HeatWave for Azure Service

Guide.

• Auto Shape Prediction

For HeatWave on AWS, the Auto Shape Prediction feature in HeatWave Autopilot uses MySQL statistics

for the workload to assess the suitability of the current shape. Auto Shape Prediction provides prompts

to upsize the shape and improve system performance, or to downsize the shape if the system is under-

utilized. See: Autopilot Shape Advisor in the HeatWave on AWS Service Guide.

Data Load

• Auto Parallel Load

Optimizes load time and memory usage by predicting the optimal degree of parallelism for each table

loaded into HeatWave. See: Section 2.2.3, “Loading Data Using Auto Parallel Load”.

• Auto Schema Inference

Lakehouse Auto Parallel Load extends Auto Parallel Load with Auto Schema Inference that can analyze

the data, infer the external table structure, and create the database and all tables. It can also use header

information from the external files to define the column names. See: Section 5.2.4.1, “Lakehouse Auto

Parallel Load Schema Inference”.

• Autopilot Indexing

Autopilot Indexing can make secondary index suggestions to improve workload performance. See:

Section 2.8.1, “Autopilot Indexing”

• Auto Encoding

Determines the optimal encoding for string column data, which minimizes the required cluster size and

improves query performance. See: Section 2.7.4, “Auto Encoding”.

• Auto Data Placement

Recommends how tables should be partitioned in memory to achieve the best query performance, and

estimates the expected performance improvement. See: Section 2.7.5, “Auto Data Placement”.

• Auto Data Compression

HeatWave and HeatWave Lakehouse can compress data stored in memory using different compression

algorithms. To minimize memory usage while providing the best query performance, auto compression

dynamically determines the compression algorithm to use for each column based on its data

characteristics. Auto compression employs an adaptive sampling technique during the data loading

process, and automatically selects the optimal compression algorithm without user intervention.

Algorithm selection is based on the compression ratio and the compression and decompression rates,

5

Query Execution

which balance the memory needed to store the data in HeatWave with query execution time. See:

Section 2.2.6, “Data Compression”.

• Unload Advisor

Unload Advisor can recommend tables to unload to reduce HeatWave memory usage. See:

Section 2.7.7, “Unload Advisor”.

• Auto Unload

Auto Unload can automate the process of unloading data from HeatWave. See: Section 2.5.3,

“Unloading Data Using Auto Unload”.

Query Execution

• Auto Query Plan Improvement

Collects previously executed queries and uses them to improve future query execution plans. See:

Section 2.3.4, “Auto Query Plan Improvement”.

• Adaptive Query Execution

Adaptive query optimization automatically improves query performance and memory consumption, and

mitigates skew-related performance issues as well as out of memory errors. It uses various statistics

to adjust data structures and system resources after query execution has started. It independently

optimizes query execution for each HeatWave node based on actual data distribution at runtime. This

helps improve the performance of ad hoc queries by up to 25%. The HeatWave optimizer generates a

physical query plan based on statistics collected by Autopilot. During query execution, each HeatWave

node executes the same query plan. With adaptive query execution, each individual HeatWave

node adjusts the local query plan based on statistics such as cardinality and distinct value counts of

intermediate relations collected locally in real-time. This allows each HeatWave node to tailor the data

structures that it needs, resulting in better query execution time, lower memory usage, and improved

data skew-related performance.

• Auto Query Time Estimation

Estimates query execution time to determine how a query might perform without having to run the query.

See: Section 2.7.6, “Auto Query Time Estimation”.

• Auto Change Propagation

Auto Change Propagation intelligently determines the optimal time when changes to data on the MySQL

DB System should be propagated to the HeatWave Storage Layer. Only available for HeatWave on OCI.

• Auto Scheduling

Prioritizes queries in an intelligent way to reduce overall query execution wait times. See: Section 2.3.3,

“Auto Scheduling”.

• Auto Thread Pooling

Provides sustained throughput during high transaction concurrency. Where multiple clients are

running queries concurrently, Auto Thread Pooling applies workload-aware admission control

to eliminate resource contention caused by too many waiting transactions. Auto Thread Pooling

automatically manages the settings for the thread pool control variables thread_pool_size,

thread_pool_max_transactions_limit, and thread_pool_query_threads_per_group. For

details of how the thread pool works, see Thread Pool Operation.

6

Failure Handling

Failure Handling

• Auto Error Recovery

For HeatWave on OCI, Auto Error Recovery recovers a failed node or provisions a new one and reloads

data from the HeatWave storage layer when a HeatWave node becomes unresponsive due to a software

or hardware failure. See: HeatWave Cluster Failure and Recovery in the HeatWave on OCI Service

Guide.

For HeatWave on AWS, Auto Error Recovery recovers a failed node and reloads data from the MySQL

DB System when a HeatWave node becomes unresponsive due to a software failure.

1.7 MySQL Functionality for HeatWave

The following items provide additional functionality for MySQL that is only available with HeatWave

MySQL:

• Section 2.17, “Bulk Ingest Data to MySQL Server”.

• See Section 2.12.1, “Aggregate Functions” for the HLL() function.

• See Section 2.12.1.1, “GROUP BY Modifiers” for the CUBE modifier.

• See Section 2.13, “SELECT Statement” for the QUALIFY and TABLESAMPLE clauses.

7

8

Chapter 2 HeatWave MySQL

Table of Contents

2.1 Before You Begin ....................................................................................................................... 11

2.2 Loading Data to HeatWave MySQL ............................................................................................. 11

2.2.1 Prerequisites .................................................................................................................... 12

2.2.2 Loading Data Manually .................................................................................................... 13

2.2.3 Loading Data Using Auto Parallel Load ............................................................................. 15

2.2.4 Monitoring Load Progress ................................................................................................ 24

2.2.5 Checking Load Status ...................................................................................................... 24

2.2.6 Data Compression ........................................................................................................... 25

2.2.7 Change Propagation ........................................................................................................ 25

2.2.8 Reload Tables ................................................................................................................. 26

2.3 Running Queries ........................................................................................................................ 27

2.3.1 Query Prerequisites ......................................................................................................... 27

2.3.2 Running Queries .............................................................................................................. 28

2.3.3 Auto Scheduling .............................................................................................................. 29

2.3.4 Auto Query Plan Improvement ......................................................................................... 30

2.3.5 Dynamic Query Offload .................................................................................................... 30

2.3.6 Debugging Queries .......................................................................................................... 30

2.3.7 Query Runtimes and Estimates ........................................................................................ 32

2.3.8 CREATE TABLE ... SELECT Statements .......................................................................... 33

2.3.9 INSERT ... SELECT Statements ....................................................................................... 33

2.3.10 Using Views ................................................................................................................... 33

2.4 Modifying Tables ........................................................................................................................ 34

2.5 Unloading Data from HeatWave MySQL ...................................................................................... 35

2.5.1 Unloading Tables ............................................................................................................. 35

2.5.2 Unloading Partitions ......................................................................................................... 36

2.5.3 Unloading Data Using Auto Unload .................................................................................. 36

2.5.4 Unload All Tables ............................................................................................................ 40

2.6 Table Load and Query Example .................................................................................................. 41

2.7 Workload Optimization for OLAP ................................................................................................. 43

2.7.1 Encoding String Columns ................................................................................................. 43

2.7.2 Defining Data Placement Keys ......................................................................................... 45

2.7.3 HeatWave Autopilot Advisor Syntax .................................................................................. 46

2.7.4 Auto Encoding ................................................................................................................. 48

2.7.5 Auto Data Placement ....................................................................................................... 51

2.7.6 Auto Query Time Estimation ............................................................................................. 55

2.7.7 Unload Advisor ................................................................................................................ 58

2.7.8 Advisor Command-line Help ............................................................................................. 58

2.7.9 Autopilot Report Table ..................................................................................................... 59

2.7.10 Advisor Report Table ..................................................................................................... 60

2.8 Workload Optimization for OLTP ................................................................................................. 61

2.8.1 Autopilot Indexing ............................................................................................................ 61

2.9 Best Practices ............................................................................................................................ 64

2.9.1 Preparing Data ................................................................................................................ 64

2.9.2 Provisioning ..................................................................................................................... 65

2.9.3 Importing Data into the MySQL DB System ...................................................................... 66

2.9.4 Inbound Replication ......................................................................................................... 66

2.9.5 Loading Data ................................................................................................................... 66

9

2.9.6 Auto Encoding and Auto Data Placement ......................................................................... 68

2.9.7 Running Queries .............................................................................................................. 68

2.9.8 Monitoring ....................................................................................................................... 73

2.9.9 Reloading Data ................................................................................................................ 73

2.10 Supported Data Types .............................................................................................................. 74

2.11 Supported SQL Modes ............................................................................................................. 75

2.12 Supported Functions and Operators .......................................................................................... 75

2.12.1 Aggregate Functions ...................................................................................................... 75

2.12.2 Arithmetic Operators ...................................................................................................... 78

2.12.3 Cast Functions and Operators ........................................................................................ 79

2.12.4 Comparison Functions and Operators ............................................................................. 79

2.12.5 Control Flow Functions and Operators ............................................................................ 80

2.12.6 Data Masking and De-Identification Functions ................................................................. 80

2.12.7 Encryption and Compression Functions .......................................................................... 81

2.12.8 JSON Functions ............................................................................................................. 81

2.12.9 Logical Operators ........................................................................................................... 82

2.12.10 Mathematical Functions ................................................................................................ 83

2.12.11 String Functions and Operators .................................................................................... 84

2.12.12 Temporal Functions ...................................................................................................... 86

2.12.13 Window Functions ........................................................................................................ 89

2.13 SELECT Statement ................................................................................................................... 89

2.14 String Column Encoding Reference ........................................................................................... 92

2.14.1 Variable-length Encoding ................................................................................................ 92

2.14.2 Dictionary Encoding ....................................................................................................... 93

2.14.3 Column Limits ................................................................................................................ 94

2.15 Troubleshooting ........................................................................................................................ 94

2.16 Metadata Queries ..................................................................................................................... 97

2.16.1 Secondary Engine Definitions ......................................................................................... 97

2.16.2 Excluded Columns ......................................................................................................... 98

2.16.3 String Column Encoding ................................................................................................. 98

2.16.4 Data Placement ............................................................................................................. 99

2.17 Bulk Ingest Data to MySQL Server .......................................................................................... 100

2.18 HeatWave MySQL Limitations ................................................................................................. 102

2.18.1 Change Propagation Limitations ................................................................................... 102

2.18.2 Data Type Limitations ................................................................................................... 102

2.18.3 Functions and Operator Limitations ............................................................................... 103

2.18.4 Index Hint and Optimizer Hint Limitations ...................................................................... 105

2.18.5 Join Limitations ............................................................................................................ 105

2.18.6 Partition Selection Limitations ....................................................................................... 106

2.18.7 Variable Limitations ...................................................................................................... 106

2.18.8 Bulk Ingest Data to MySQL Server Limitations ............................................................... 107

2.18.9 Other Limitations .......................................................................................................... 108

When a HeatWave Cluster is enabled, queries that meet certain prerequisites are automatically offloaded

from the MySQL DB System to the HeatWave Cluster for accelerated processing.

Queries are issued from a MySQL client or application that interacts with the HeatWave Cluster by

connecting to the MySQL DB System. Results are returned to the MySQL DB System and to the MySQL

client or application that issued the query.

Manually loading data into HeatWave involves preparing tables on the MySQL DB System and executing

load statements. See Section 2.2.2, “Loading Data Manually”. The Auto Parallel Load utility facilitates the

process of loading data by automating required steps and optimizing the number of parallel load threads.

See Section 2.2.3, “Loading Data Using Auto Parallel Load”.

10

Before You Begin

For HeatWave on AWS, load data into HeatWave using the HeatWave Console. See Manage Data in

HeatWave with Workspaces in the HeatWave on AWS Service Guide.

For HeatWave for Azure, see Importing Data to HeatWave in the HeatWave for Azure Service Guide.

When HeatWave loads a table, the data is sharded and distributed among HeatWave nodes. After a table

is loaded, DML operations on the tables are automatically propagated to the HeatWave nodes. No user

action is required to synchronize data. For more information, see Section 2.2.7, “Change Propagation”.

On Oracle Cloud Infrastructure, OCI, data loaded into HeatWave, including propagated changes,

automatically persists in the HeatWave Storage Layer to OCI Object Storage for fast recovery in case of a

HeatWave node or cluster failure. For HeatWave on AWS, data is recovered from the MySQL DB System.

After running a number of queries, use the HeatWave Autopilot Advisor to optimize the workload.

Advisor analyzes the data and query history to provide string column encoding and data placement

recommendations. See Section 2.7, “Workload Optimization for OLAP”.

2.1 Before You Begin

Before you begin using HeatWave, the following is assumed:

• An operational MySQL DB System, and able to connect to it using a MySQL client. If not, refer to the

following procedures:

• For HeatWave on OCI, see Creating a DB System, and Connecting to a DB System in the HeatWave

on OCI Service Guide in the HeatWave on OCI Service Guide.

• For HeatWave on AWS, see Creating a DB System, and Connecting from a Client in the HeatWave on

AWS Service Guide.

• For HeatWave for Azure, see Provisioning HeatWave and Connecting to HeatWave in the HeatWave

for Azure Service Guide.

• The MySQL DB System has an operational HeatWave Cluster. If not, refer to the following procedures:

• For HeatWave on OCI, see Adding a HeatWave Cluster in the HeatWave on OCI Service Guide.

• For HeatWave on AWS, see Creating a HeatWave Cluster in the HeatWave on AWS Service Guide.

• For HeatWave for Azure, see Provisioning HeatWave in the HeatWave for Azure Service Guide.

2.2 Loading Data to HeatWave MySQL

This section describes how to load data into HeatWave. The following methods are supported:

• Loading data manually. This method loads one table at a time and involves executing multiple

statements for each table. See Section 2.2.2, “Loading Data Manually”.

• Loading data using Auto Parallel Load. This HeatWave Autopilot enabled method loads one or more

schemas at a time and facilitates loading by automating manual steps and optimizing the number of

parallel load threads for a faster load. See Section 2.2.3, “Loading Data Using Auto Parallel Load”.

• For users of HeatWave on AWS, load data using the HeatWave Console. This GUI-based and

HeatWave Autopilot enabled method loads selected schemas and tables using an optimized number of

parallel load threads for a faster load. See Manage Data in HeatWave with Workspaces in the HeatWave

on AWS Service Guide.

11

Prerequisites

HeatWave loads data with batched, multi-threaded reads from InnoDB. HeatWave then converts the data

into columnar format and sends it over the network to distribute it among HeatWave nodes in horizontal

slices. HeatWave partitions data by the table primary key, unless the table definition includes data

placement keys. See Section 2.7.2, “Defining Data Placement Keys”.

Concurrent DML operations and queries on the MySQL node are supported while a data load operation

is in progress; however, concurrent operations on the MySQL node can affect load performance and vice

versa.

After tables are loaded, changes to table data on the MySQL DB System node are automatically

propagated to HeatWave. For more information, see Section 2.2.7, “Change Propagation”.

For each table that is loaded in HeatWave, 4MB of memory (the default heap segment size) is allocated

from the root heap. This memory requirement should be considered when loading a large number of

tables. For example, with a root heap of approximately 400GB available to HeatWave, loading 100K tables

would consume all available root heap memory (100K x 4GB = 400GB). As of MySQL 8.0.30, the default

heap segment size is reduced from 4MB to a default of 64KB per table, reducing the amount of memory

that must be allocated from the root heap for each loaded table.

As of MySQL 8.0.28-u1, HeatWave compresses data as it is loaded, which permits HeatWave nodes to

store more data at a minor cost to performance. If you do not want to compress data as it is loaded in

HeatWave, you must disable compression before loading data. See Section 2.2.6, “Data Compression”.

Note

Before MySQL 8.0.31, DDL operations are not permitted on tables that are loaded

in HeatWave. In those releases, to alter the definition of a table, you must unload

the table and remove the SECONDARY_ENGINE attribute before performing the DDL

operation. See Section 2.4, “Modifying Tables”.

For related best practices, see Section 2.9, “Best Practices”.

2.2.1 Prerequisites

Before loading data, ensure that you have met the following prerequisites:

• The data you want to load must be available on the MySQL DB System. For information about importing

data into a MySQL DB System, refer to the following instructions:

• For HeatWave on OCI, see Importing and Exporting Databases in the HeatWave on OCI Service

Guide.

• For HeatWave on AWS, see Importing Data in the HeatWave on AWS Service Guide.

• For HeatWave for Azure, see Importing Data to HeatWave in the HeatWave for Azure Service Guide.

Tip

For an OLTP workload that resides in an on-premise instance of MySQL Server,

inbound replication is recommended for replicating data to the MySQL DB

System for offload to the HeatWave Cluster. See Section 2.9, “Best Practices”

and Replication in the HeatWave on OCI Service Guide.

• The tables you intend to load must be InnoDB tables. You can manually convert tables to InnoDB using

the following ALTER TABLE statement:

mysql> ALTER TABLE tbl_name ENGINE=InnoDB;

12

Loading Data Manually

• The tables you intend to load must be defined with a primary key. You can add a primary key using the

following syntax:

mysql> ALTER TABLE tbl_name ADD PRIMARY KEY (column);

Adding a primary key is a table-rebuilding operation. For more information, see Primary Key Operations.

Primary key columns defined with column prefixes are not supported.

Load time is affected if the primary key contains more than one column, or if the primary key column

is not an INTEGER column. The impact on MySQL performance during load, change propagation, and

query processing depends on factors such as data properties, available resources (compute, memory,

and network), and the rate of transaction processing on the MySQL DB System.

• Identify all of the tables that your queries access to ensure that you load all of them into HeatWave.

If a query accesses a table that is not loaded into HeatWave, it will not be offloaded to HeatWave for

processing.

• Column width cannot exceed 65532 bytes.

• The number of columns per table cannot exceed 1017. Before MySQL 8.0.29, the limit was 900.

2.2.2 Loading Data Manually

As of MySQL 8.4.0, HeatWave supports InnoDB partitions. Selectively loading and unloading partitions

will reduce memory requirements. The use of partition information can allow data-skipping and accelerate

query processing.

As of MySQL 8.2.0, HeatWave Guided Load uses HeatWave Autopilot to exclude schemas, tables, and

columns that cannot be loaded, and define RAPID as the secondary engine. To load data manually, follow

these steps:

1. Optionally, applying string column encoding and data placement workload optimizations. For more

information, see Section 2.7, “Workload Optimization for OLAP”.

2. Loading tables or partitions using ALTER TABLE ... SECONDARY_LOAD statements. See

Section 2.2.2.3, “Loading Tables” and Section 2.2.2.4, “Loading Partitions”.

Before MySQL 8.2.0, to load data manually, follow these steps:

1. Excluding columns with unsupported data types. See Section 2.2.2.1, “Excluding Table Columns”.

2. Defining RAPID as the secondary engine for tables you want to load. See Section 2.2.2.2, “Defining the

Secondary Engine”.

3. Optionally, applying string column encoding and data placement workload optimizations. For more

information, see Section 2.7, “Workload Optimization for OLAP”.

4. Loading tables using ALTER TABLE ... SECONDARY_LOAD statements. See Section 2.2.2.3,

“Loading Tables”.

2.2.2.1 Excluding Table Columns

Before loading a table into HeatWave, columns with unsupported data types must be excluded; otherwise,

the table cannot be loaded. For a list of data types that HeatWave supports, see Section 2.10, “Supported

Data Types”.

13

Loading Data Manually

Optionally, exclude columns that are not relevant to the intended queries. Excluding irrelevant columns is

not required but doing so reduces load time and the amount of memory required to store table data.

To exclude a column, specify the NOT SECONDARY column attribute in an ALTER TABLE or CREATE

TABLE statement, as shown below. The NOT SECONDARY column attribute prevents a column from being

loaded into HeatWave when executing a table load operation.

mysql> ALTER TABLE tbl_name MODIFY description BLOB NOT SECONDARY;

mysql> CREATE TABLE orders (id INT, description BLOB NOT SECONDARY);

Note

If a query accesses a column defined with the NOT SECONDARY attribute, the query

is executed on the MySQL DB System by default.

To include a column that was previously excluded, refer to the procedure described in Section 2.4,

“Modifying Tables”.

2.2.2.2 Defining the Secondary Engine

For each table that you want to load into HeatWave, you must define the HeatWave query processing

engine (RAPID) as the secondary engine for the table. To define RAPID as the secondary engine, specify

the SECONDARY_ENGINE table option in an ALTER TABLE or CREATE TABLE statement:

mysql> ALTER TABLE tbl_name SECONDARY_ENGINE = RAPID;

mysql> CREATE TABLE orders (id INT) SECONDARY_ENGINE = RAPID;

2.2.2.3 Loading Tables

To load a table into HeatWave, specify the SECONDARY_LOAD clause in an ALTER TABLE statement.